Natural Language Processing and Machine Learning for Information Retrieval

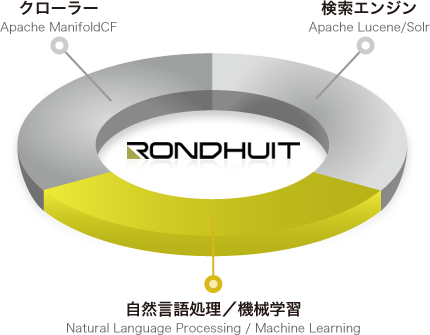

RONDHUIT applies these technologies in a variety of areas to provide you with a better search system.

What Is a Better Search System?

What does RONDHUIT mean by a “better search system”? We believe it is a system that promptly provides the information users want. Extremely simple, isn’t it? Surprisingly, however, such a system is hard to come by.

Why is it so difficult? Because you have to pursue two conflicting goals at the same time.

The first goal is to “return all the information that users want, with nothing missed”. If results are missed (“search leakage”), users have to pick other keywords and keep searching, which means extra time before they reach the information they want.

The second goal is to “return only the information that users want”. If the search results include irrelevant information that the user does not want (called “search error”), the user has to open the documents in the results one by one to check their content, which is another source of extra time.

These two goals are known to be in a trade-off relationship. Tuning the system to minimize “search leakage” for the first goal makes the result list longer, which produces more “search errors”. Conversely, tuning the system to minimize “search errors” for the second goal makes the result list shorter, which produces more “search leakage”.
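
The trade-off can be made concrete with the standard information retrieval measures recall and precision. The mapping used here is an interpretation of the text: less “search leakage” corresponds to higher recall, and fewer “search errors” corresponds to higher precision. The document IDs and result sets below are invented purely for illustration.

```python
def recall(retrieved, relevant):
    """Share of the wanted documents that were returned (low recall = search leakage)."""
    return len(retrieved & relevant) / len(relevant)


def precision(retrieved, relevant):
    """Share of the returned documents that were wanted (low precision = search error)."""
    return len(retrieved & relevant) / len(retrieved)


# Hypothetical data: documents 1 to 4 are what the user actually wants.
relevant = {1, 2, 3, 4}

# A strictly tuned system returns few documents, a loosely tuned one returns many.
strict_results = {1, 2}
loose_results = {1, 2, 3, 4, 5, 6, 7, 8}

for name, results in [("strict", strict_results), ("loose", loose_results)]:
    print(name, "recall:", recall(results, relevant),
          "precision:", precision(results, relevant))
# strict recall: 0.5 precision: 1.0
# loose recall: 1.0 precision: 0.5
```

In this toy case the loose setting removes all leakage but half of its results are errors, while the strict setting has no errors but misses half of the wanted documents.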

However, to provide the “better search system” that RONDHUIT envisions, we need to pursue both goals at once. So what should be done? To solve the problem, we have introduced both natural language processing and machine learning technologies. Let us briefly explain how we accomplish this.

How Can We Search without Leakage?

In general, decreasing “search leakage” can be accomplished with the following methods.

- Using character N-grams.

- Character normalization.

- Applying a synonym dictionary.

All of the above functions are provided by Apache Lucene/Solr. “Using character N-grams” corresponds to configuring an appropriate Analyzer. “Character normalization” and “applying a synonym dictionary” correspond to setting up the respective dictionaries. “Character normalization” uses a dictionary to absorb spelling variants, for example half-width versus full-width characters, or old and new forms of kanji. “Applying a synonym dictionary” registers keywords such as “首相” (“prime minister”) and “内閣総理大臣” (the formal title of the same office) as equivalent in the dictionary, so that searching for either keyword also hits documents that contain the other.
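
The three techniques can be sketched in a few lines of plain Python. This is only an illustration of the ideas, not Lucene/Solr code: in Solr the same steps are configured through analyzers and dictionary files. The kanji table and the synonym group are hypothetical, minimal stand-ins for real dictionaries.

```python
import unicodedata

# Hypothetical old-to-new kanji table; a real dictionary would be much larger.
OLD_TO_NEW_KANJI = str.maketrans({"國": "国", "學": "学"})

# Hypothetical synonym groups; every member of a group is treated as equivalent.
SYNONYM_GROUPS = [{"首相", "内閣総理大臣"}]


def normalize(text):
    """Fold full-width/half-width variants (NFKC), then map old kanji to new ones."""
    return unicodedata.normalize("NFKC", text).translate(OLD_TO_NEW_KANJI)


def char_ngrams(text, n=2):
    """Split text into overlapping character N-grams."""
    return [text[i:i + n] for i in range(len(text) - n + 1)]


def expand_query(keyword):
    """Return the keyword together with all of its registered synonyms."""
    expanded = {keyword}
    for group in SYNONYM_GROUPS:
        if keyword in group:
            expanded |= group
    return expanded


print(normalize("Ｓｏｌｒ"))       # Solr
print(normalize("國學"))           # 国学
print(char_ngrams("内閣総理大臣"))  # ['内閣', '閣総', '総理', '理大', '大臣']
print(sorted(expand_query("首相")))
```

Each step widens what a query can match, which is exactly why it reduces “search leakage”.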

Apache Lucene/Solr lets you configure these dictionaries, so all you have to do to decrease “search leakage” is prepare them. The dictionary for “character normalization” can be maintained manually without too much trouble, because the set of characters is finite. The synonym dictionary, however, cannot be handled that way: manual maintenance has its limits, because new words (keywords) are coined every day.

Would purchasing dictionaries from dictionary vendors solve the problem? Yes, that is one solution. Those dictionaries, however, contain many general, non-specialist terms that do not necessarily fit your business domain, so you could end up buying a white elephant. What we suggest instead is to generate a synonym dictionary automatically from the “text data” (we call this the “corpus”) that your everyday business produces, and to fill in the missing parts manually. This is where we use natural language processing and machine learning.
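
The text does not say which algorithm is used to generate the dictionary. As one possible illustration (an assumption, not RONDHUIT’s actual method), the sketch below proposes synonym candidates by comparing the contexts in which words occur: words that appear in very similar sentences get a high cosine similarity. The tiny pre-tokenized corpus and the 0.9 threshold are hypothetical.

```python
import math
from collections import Counter, defaultdict
from itertools import combinations

# Hypothetical corpus, already split into words.
corpus = [
    ["首相", "が", "会見", "を", "開く"],
    ["内閣総理大臣", "が", "会見", "を", "開く"],
    ["首相", "が", "法案", "を", "説明"],
    ["内閣総理大臣", "が", "法案", "を", "説明"],
    ["記者", "が", "質問", "を", "送る"],
]


def context_vectors(sentences):
    """Count, for every word, the other words that share a sentence with it."""
    vectors = defaultdict(Counter)
    for tokens in sentences:
        for i, word in enumerate(tokens):
            vectors[word].update(tokens[:i] + tokens[i + 1:])
    return vectors


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def synonym_candidates(sentences, threshold=0.9):
    """Return (word1, word2, score) for word pairs with near-identical contexts."""
    vectors = context_vectors(sentences)
    pairs = []
    for w1, w2 in combinations(sorted(vectors), 2):
        score = cosine(vectors[w1], vectors[w2])
        if score >= threshold:
            pairs.append((w1, w2, score))
    return pairs


# Print candidates as comma-separated lines, the form used for equivalent
# synonyms in a Solr synonym file; a person should review them before use.
for w1, w2, _ in synonym_candidates(corpus):
    print(f"{w1},{w2}")
```

With this toy corpus the pair “首相” and “内閣総理大臣” is proposed as a candidate, since the two words occur in identical contexts. On real data such output is noisy, which is why the remaining gaps and mistakes are corrected manually.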

The methods above can decrease “search leakage”. Meanwhile, “search error”, which is in a trade-off relationship with it, will increase. Next, we look at how to bring that increased “search error” back down.
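
A well-known illustration of how this happens with character N-grams: a search for “京都” (Kyoto) also hits a document about “東京都” (Tokyo Metropolis), because the bigram “京都” occurs inside it. The sketch below contrasts this with word-based matching. The word list is written by hand and merely stands in for the output of a morphological analyzer, which the next section discusses; a real analyzer may segment the text differently.

```python
def char_bigrams(text):
    """Split text into overlapping two-character sequences."""
    return [text[i:i + 2] for i in range(len(text) - 1)]


document = "東京都の観光案内"  # a guide to Tokyo, not Kyoto
query = "京都"

# Bigram index: the query's only bigram appears inside "東京都", so it matches.
bigram_index = set(char_bigrams(document))
bigram_hit = all(gram in bigram_index for gram in char_bigrams(query))

# Hand-written word tokens standing in for a morphological analyzer (assumption).
word_index = {"東京都", "の", "観光", "案内"}
word_hit = query in word_index

print(bigram_hit, word_hit)  # True False
```

The bigram index never misses “京都”, but it pays for that with an irrelevant hit.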

How Can We Search Only What We Need?

In general, “search error” can be decreased by using a morphological analyzer instead of character N-grams. Here, however, we need a way to decrease the “search error” that has increased as a result of decreasing “search leakage”. Because these two problems cannot be solved at the same time, we propose decreasing “search error”, that is, improving search precision, with the following methods.

- Making the Most of the Facet and Filtered Search That Apache Solr Offers

- Ranking Tuning

The first method uses the Apache Solr “facet” function to progressively narrow the search results down to what the user needs. For this to work, however, the documents being searched have to be structured. In the real world there are so many unstructured documents that Solr’s facet function often goes to waste. RONDHUIT therefore rescues these unstructured documents by converting them into structured ones using natural language processing and machine learning. More precisely, we take your text data, apply keyword extraction and named entity extraction, and structure the documents at indexing time so that Solr’s functions can be used effectively.
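
As a rough sketch of this flow, the code below first turns free text into a document with facetable fields and then builds a Solr request that facets on those fields and filters by a chosen value. The parameters `q`, `facet`, `facet.field` and `fq` are standard Solr query parameters. Everything else is assumed for illustration: the endpoint URL, the collection name `documents`, the field names, and the small name list that stands in for a trained named entity extractor.

```python
from urllib.parse import urlencode

# Hypothetical Solr endpoint; adjust host and collection to your installation.
SOLR_SELECT_URL = "http://localhost:8983/solr/documents/select"

# Hypothetical name lists standing in for a trained named entity extractor.
GAZETTEER = {
    "person": ["Taro Yamada"],
    "organization": ["Ministry of Finance"],
}


def structure_document(doc_id, text):
    """Turn free text into a document with fields that can be faceted on."""
    doc = {"id": doc_id, "body": text}
    for field, names in GAZETTEER.items():
        doc[field] = [name for name in names if name in text]
    return doc


def build_facet_query(keyword, facet_fields, filters=None):
    """Build a select URL that requests facet counts and applies filter queries."""
    params = [("q", keyword), ("facet", "true")]
    params += [("facet.field", field) for field in facet_fields]
    # Each fq narrows the results to a facet value the user has selected.
    params += [("fq", f'{field}:"{value}"') for field, value in (filters or {}).items()]
    return f"{SOLR_SELECT_URL}?{urlencode(params)}"


doc = structure_document("1", "Taro Yamada visited the Ministry of Finance.")
print(doc["person"], doc["organization"])

url = build_facet_query("budget", ["person", "organization"],
                        {"organization": "Ministry of Finance"})
print(url)
```

Once documents carry fields such as `person` and `organization`, the user can start from a broad keyword search and click facet values to narrow the list step by step.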

The second method is to tune the ranking (the display order of search results) appropriately so that the apparent search precision improves. Solr can statically control the ranking by adjusting field weights, but this has its limitations. We therefore use “learning-to-rank”, which applies a machine learning framework to estimate the ranking. Learning-to-rank is a form of supervised learning that uses training data with correct labels. Collecting such training data is generally costly, but RONDHUIT’s subscription package provides impression log output and a function that creates training data with a click model, both of which are needed to collect learning-to-rank training data. This lets you build a learning-to-rank model at low cost and with little effort.
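
To give a feel for how an impression log can become training data, here is a deliberately naive sketch that uses the click-through rate of each query and document pair as a relevance label. It is not the click model in RONDHUIT’s package: real click models typically also correct for effects such as position bias. The log format, field names and thresholds are all hypothetical.

```python
from collections import defaultdict

# Hypothetical impression log: one record each time a document was shown.
impression_log = [
    {"query": "solr", "doc": "A", "clicked": True},
    {"query": "solr", "doc": "A", "clicked": True},
    {"query": "solr", "doc": "B", "clicked": False},
    {"query": "solr", "doc": "B", "clicked": True},
    {"query": "solr", "doc": "C", "clicked": False},
    {"query": "solr", "doc": "C", "clicked": False},
]


def click_through_rates(log):
    """Return clicks divided by impressions for every (query, doc) pair."""
    shown = defaultdict(int)
    clicked = defaultdict(int)
    for record in log:
        key = (record["query"], record["doc"])
        shown[key] += 1
        clicked[key] += int(record["clicked"])
    return {key: clicked[key] / shown[key] for key in shown}


def to_label(ctr):
    """Map a click-through rate to a graded label (thresholds are arbitrary)."""
    if ctr >= 0.6:
        return 2
    if ctr >= 0.3:
        return 1
    return 0


training_labels = {key: to_label(ctr)
                   for key, ctr in click_through_rates(impression_log).items()}
print(training_labels)
# {('solr', 'A'): 2, ('solr', 'B'): 1, ('solr', 'C'): 0}
```

Labels of this kind, paired with feature values for each document, are the supervised training data that a learning-to-rank model needs.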

Summary: Making the Most of Big Data for a Better Search System

To wrap up, RONDHUIT makes the most of your big data to provide you with a better search system, and we use natural language processing and machine learning to achieve this objective.

| Objective | Functions/Tools | Your Data to Be Used |
|---|---|---|
| Decrease search leakage | Auto-generation of synonym dictionary | Your business text data. It is usually the same as the documents being searched, so you do not need to prepare it separately for us. |
| Decrease search error | Keyword extraction, named entity extraction | Your business text data (same as above) |
| Decrease search error | Learning-to-rank, click model | Impression log |